The future of manufacturing control is here as an AI evolution of statistical process control, and Nanotronics is the first to see it.

By Damas Limoge, Senior R&D Engineer, Nanotronics

Because of human limits and blind spots, combined with static process control, manufacturing errors often go undetected or unreported and propagate downstream. To make factories more efficient, resilient, and secure, we need to begin by reconsidering how errors are detected and remedied on the assembly line, using a more dynamic approach. Artificial Intelligence Process Control (AIPC) finds corrections for defects in near real time, during the manufacturing process.

The conventional manufacturing control paradigm has persisted for the better part of a century, with roots in statistical process control (SPC), which originated in the early 1920s through the work of Walter A. Shewhart and, later, W. Edwards Deming. The concept is simple enough: divide the sources of variation into two categories in the interest of minimizing deviations in quality. The first category, common sources of variation, is intrinsic to the process and, in the original conception, cannot be overcome. The second category, special sources, is extrinsic to the process and can be eliminated with additional engineering and adept process design.
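In practice, SPC operationalizes this split with a control chart: data from an in-control period set a center line and limits (conventionally three sigma), points inside the limits are attributed to common causes, and points outside are flagged as possible special causes. The sketch below is a minimal Shewhart individuals chart, assuming sigma is estimated from the average moving range; the function names and sample measurements are illustrative, not taken from the article.

```python
from statistics import mean

# d2 bias-correction constant for moving ranges of two consecutive points
D2_N2 = 1.128


def individuals_chart_limits(baseline):
    """Return (lower, center, upper) 3-sigma limits from in-control baseline data."""
    center = mean(baseline)
    # Estimate short-term (common-cause) sigma from the average moving range
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma_hat = mean(moving_ranges) / D2_N2
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat


def special_cause_points(samples, limits):
    """Indices of samples outside the control limits (candidate special causes)."""
    lower, _, upper = limits
    return [i for i, x in enumerate(samples) if x < lower or x > upper]


# Hypothetical quality measurements, not data from the article
baseline = [10.0, 10.2, 9.9, 10.1, 9.8, 10.0, 10.1, 9.9]
limits = individuals_chart_limits(baseline)
print(special_cause_points([10.05, 9.95, 11.2, 10.1], limits))  # [2]
```

Note that the chart can only flag a point after it has been measured, and common-cause scatter inside the limits is deliberately left alone.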

The manufacturing world reaped the dividends of minimizing special sources of variation almost immediately, as factories became more efficient and process yields steadily increased. Through simple feed-forward approaches to outer-loop control, and an acute focus on minimizing these assignable sources of variation, the output distributions of our factories settled into predictable, well-behaved modes of operation.

The original sin of SPC, however, was its requirement for process completion prior to corrective action. Only after a statistically significant sample of quality data had been measured could an engineer make any statement about the likelihood of a special source of variation. This method still fits the modern reality of massive volumes of data produced by factory processes: our factories are now built with an intuition for the value of data, using vast networks of interconnected sensors and data acquisition hardware. Until now, however, that data has only been used to repurpose the old methods of SPC. The opportunity for a new approach, one that reacts to both special and common sources of variation, is greater than ever.

We capitalized on that opportunity and developed a framework called Artificial Intelligence Process Control (AIPC). AIPC turns SPC on its head by inserting the engineering intuition of corrective action into active process control. Feedback loops, of course, have existed nearly as long as SPC, but they are typically structured tightly around linear phenomena, such as controlling the temperature of a heating plate or the flow rate of a constituent ingredient. The set-points of these controllers are what AIPC targets: it adjusts not the granular control actions but the top-level instructions, which are typically prescribed by an experienced engineer. Keeping the engineer in the loop was essential for synthesizing, or perhaps wrangling, the ever-growing torrent of data streams, whose correlations are often nonlinear. The downside is latency: the forensic work of tracing a variation to its source is bound to the slow, measured pace of human deliberation. AIPC removes this latency by imprinting the useful sliver of human intelligence responsible for process intuition onto an inferential agent that can manage dense data streams in real time, while taking corrective action in response to the normal variations that are usually ignored.
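The distinction between the inner feedback loop and the set-point it tracks can be sketched in a few lines. In this minimal illustration, assuming a toy integrating process and a fixed proportional rule in place of a learned agent, an inner proportional loop drives a temperature to its set-point, while an outer, once-per-layer supervisor moves that set-point so that a downstream quality value converges to its canonical target despite an unmodeled offset. All names, gains, and the quality model are hypothetical.

```python
def inner_loop(setpoint, temp, kp=0.5, steps=50):
    """Proportional loop driving a toy integrating process toward its set-point."""
    for _ in range(steps):
        heat = kp * (setpoint - temp)  # P-control action on the tracking error
        temp += 0.2 * heat  # hypothetical process response per step
    return temp


def supervise(canonical, disturbance, layers=10, gain=0.8):
    """Outer loop: adjust the inner loop's set-point once per layer."""
    setpoint, temp = canonical, 20.0
    history = []
    for _ in range(layers):
        temp = inner_loop(setpoint, temp)
        # Hypothetical quality model: an unmodeled effect shifts quality away from temp
        quality = temp + disturbance
        history.append(quality)
        # Stand-in for the learned agent: nudge the set-point toward canonical quality
        setpoint += gain * (canonical - quality)
    return setpoint, history


final_setpoint, history = supervise(canonical=200.0, disturbance=5.0)
print(round(history[0], 2), round(history[-1], 2), round(final_setpoint, 2))
```

The inner loop alone tracks its set-point well but cannot see the quality offset; only the outer adjustment of the set-point removes it.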

As a miniaturized, analogous representation of a full-scale factory, our research targeted the ubiquitous platform of extrusion-based additive manufacturing, commonly known as the 3D printer. The 3D printer has all the process elements of a large factory, minus the multi-billion-dollar capital investment. It actuates through an extruder head that deposits plastic along predesignated paths, layer by layer, eventually producing a small plastic bauble. The printed parts suffer from special variation, in the form of machine wear or improper calibration, which can be effectively minimized with a proper maintenance schedule. More importantly, the parts suffer from normal variation: pockets or voids within the printed layers that degrade material properties to the point of relegating the technology to prototypes and proofs of concept.

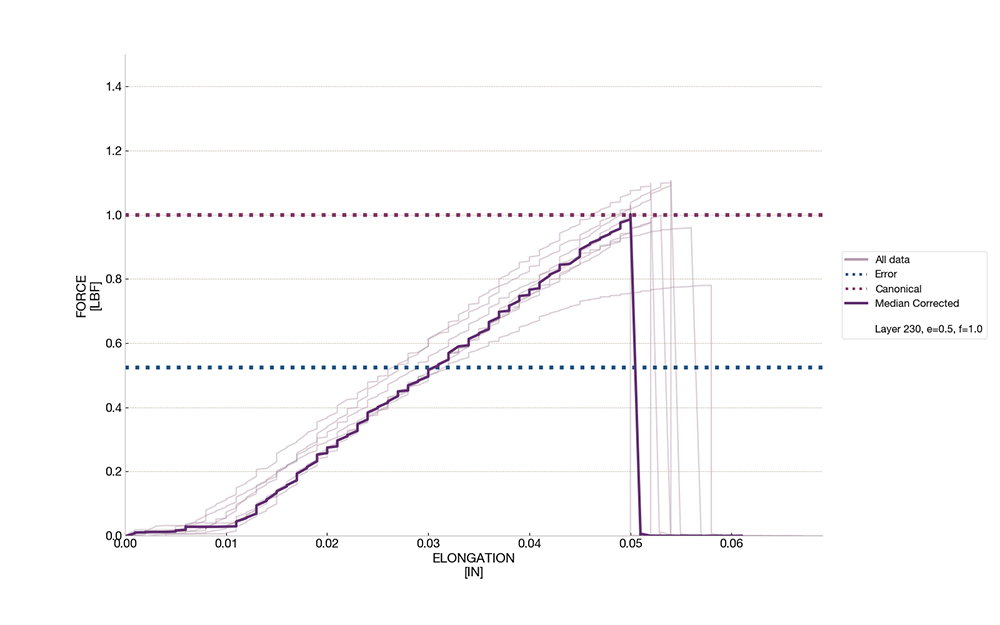

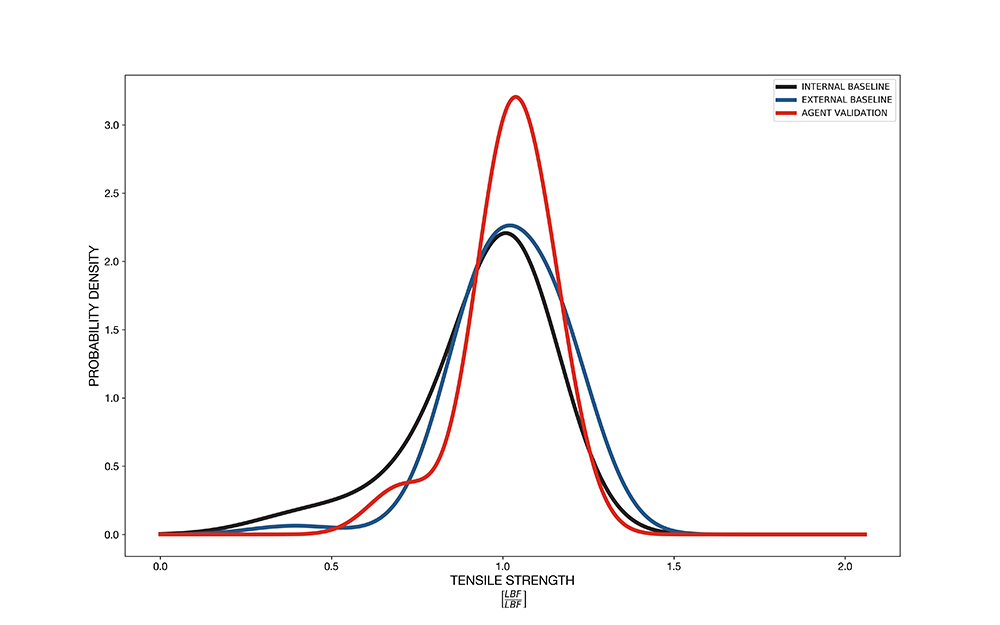

We built a system that captured images of the part's surface after every printed layer. This is equivalent to photographing parts during manufacturing, or to treating raw sensor data as a snapshot of the process in a factory. We created two case studies to relate this environment back to the standards of SPC: the first injected a special error into the print, and the second applied continuous control in response to normal errors. Both kinds of error contribute to deviations in the mean of the parts' final quality metric, which for this research was tensile strength. It is important to note that our research did not attempt to maximize tensile strength, but instead to minimize variation from an accepted, canonical tensile strength for every printed part.
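Because the goal is closeness to a canonical value rather than a maximum, a natural score penalizes deviation in either direction. The sketch below assumes two such scores, root-mean-square deviation from the canonical strength and the fraction of parts within a tolerance band; the article does not state which metric was used, and the strength values are made up purely to show that a batch with a higher mean can still score worse.

```python
from math import sqrt
from statistics import mean


def deviation_from_canonical(strengths, canonical, tolerance):
    """RMS deviation from a target value and the fraction of parts within tolerance."""
    deviations = [s - canonical for s in strengths]
    rms = sqrt(mean(d * d for d in deviations))
    within = sum(abs(d) <= tolerance for d in deviations) / len(deviations)
    return rms, within


# Hypothetical tensile strengths (MPa) for two batches; illustrative numbers only
stronger_but_scattered = [36.0, 28.0, 38.0, 27.0]
near_canonical = [30.2, 29.9, 30.1, 29.8]
print(deviation_from_canonical(stronger_but_scattered, canonical=30.0, tolerance=0.5))
print(deviation_from_canonical(near_canonical, canonical=30.0, tolerance=0.5))
```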

The case study that considered a special error used the images to perform a binary pass/fail classification of each layer; a fail label triggered a second classification of the error's type and magnitude. This second classification served as the state fed into a reinforcement learning agent, which chose corrective actions for the three subsequent layers. We successfully corrected these special errors and validated the corrective actions by confirming that the parts returned to their canonical tensile strength.
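The control flow of this two-stage pipeline can be sketched as a gate followed by a state-to-actions mapping. In this minimal sketch the classifiers and the agent are passed in as plain functions; `is_pass`, `classify_error`, `policy`, the `"under_extrusion"` label, and the flow-multiplier action are hypothetical stand-ins, not the trained models from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErrorState:
    error_type: str  # e.g. "under_extrusion" (hypothetical label set)
    magnitude: float  # estimated severity, assumed normalized to [0, 1]


def plan_corrections(image, is_pass, classify_error, policy, horizon=3):
    """Gate on pass/fail, then map the error state to actions for the next layers."""
    if is_pass(image):
        return []  # layer passed: no corrective action
    error_type, magnitude = classify_error(image)
    state = ErrorState(error_type, magnitude)
    return [policy(state, step) for step in range(horizon)]


# Hypothetical stand-ins for the trained classifiers and agent
def is_pass(image):
    return image["defect_score"] < 0.5


def classify_error(image):
    return "under_extrusion", image["defect_score"]


def policy(state, step):
    # Toy rule in place of the RL agent: boost flow, tapering over the horizon
    boost = 0.1 * state.magnitude / (step + 1)
    multiplier = (1.0 + boost) if state.error_type == "under_extrusion" else 1.0
    return {"flow_multiplier": multiplier}


print(plan_corrections({"defect_score": 0.2}, is_pass, classify_error, policy))
print(plan_corrections({"defect_score": 0.8}, is_pass, classify_error, policy))
```

Keeping the pass/fail gate separate means the more expensive error characterization and planning run only on failed layers.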

The second case study, which considered normal variation leading to quality deviations, fed images of each layer into an encoder, trained in an unsupervised manner, to extract features of normal variation. These features were the state input for another reinforcement learning model, trained offline and off-policy, that chose a corrective action for every subsequent layer. Through a judicious reduction of the environment's complexity, namely minimizing the number of actuation points by choosing actions highly correlated with final quality, and a careful design of the sampling distribution during training, our agent substantially increased the likelihood that a printed part reached the canonical tensile strength. This addresses normal variation, which SPC has historically left uncorrected, and allows intelligent corrections to be made before the part is finished.
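"Offline, off-policy" means the agent learns only from previously logged transitions and can still estimate the value of the greedy policy, even though a different policy produced the data. The sketch below illustrates the idea with tabular Q-learning, a deliberately simple substitute for the study's model; it assumes the encoder's features have already been bucketed into the hypothetical labels `"low"`, `"ok"`, and `"high"`, and the logged data and rewards are invented for illustration.

```python
import random
from collections import defaultdict

ACTIONS = (-1, 0, 1)  # hypothetical flow adjustment: decrease, hold, increase

# Hypothetical logged transitions (state, action, reward, next_state) from past
# prints. States stand in for encoder features already bucketed into labels.
LOG = [
    ("low", 1, 0.0, "ok"), ("low", 0, -1.0, "low"), ("low", -1, -1.0, "low"),
    ("high", -1, 0.0, "ok"), ("high", 0, -1.0, "high"), ("high", 1, -1.0, "high"),
    ("ok", 0, 0.0, "ok"), ("ok", 1, -1.0, "high"), ("ok", -1, -1.0, "low"),
]


def offline_q_learning(log, actions, gamma=0.5, alpha=0.5, epochs=200, seed=0):
    """Tabular Q-learning on a fixed dataset: no new interaction with the printer."""
    rng = random.Random(seed)
    q = defaultdict(float)
    data = list(log)
    for _ in range(epochs):
        rng.shuffle(data)
        for s, a, r, s_next in data:
            # Off-policy target: greedy value of the next state, regardless of
            # which behavior policy produced the logged action
            target = r + gamma * max(q[(s_next, b)] for b in actions)
            q[(s, a)] += alpha * (target - q[(s, a)])
    return q


def greedy_action(q, state, actions):
    return max(actions, key=lambda a: q[(state, a)])


q = offline_q_learning(LOG, ACTIONS)
print({s: greedy_action(q, s, ACTIONS) for s in ("low", "ok", "high")})
# {'low': 1, 'ok': 0, 'high': -1}
```

With real image features the state space is continuous, so a function approximator would replace the table, and how the logged data are sampled during training matters far more than in this toy.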

Every step of this research kept a vigilant eye toward wider applications. The concept of a layer, or discrete step, is a universal element of manufacturing: each time sample or assembly station represents a new opportunity to correct normal variation. The use of image data extends to our Nanotronics hardware, with optical inspection tools available in affordable packages, and more broadly abstracts to the dense sensor data streams that factories already produce. We used a sophisticated approach to sample our training data, showing that these problems can be solved with large datasets already gathered from past manufacturing experience. Our actions were limited to the rate and volume of the extruded plastic; rather than leveraging the freeform possibilities of 3D printing, they only prescribed changes to a part's already established recipe.
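Restricting the agent to adjustments of an established recipe can be expressed as a bounded, relative correction applied to a per-layer baseline, leaving the recipe itself intact. This is a minimal sketch assuming a hypothetical `LayerRecipe` with two fields and an assumed ±10% per-layer limit; neither the field names nor the bound come from the article.

```python
from dataclasses import dataclass, replace

MAX_ADJUST = 0.10  # assumed safety bound: at most +/-10% change per layer


@dataclass(frozen=True)
class LayerRecipe:
    extrusion_rate: float  # hypothetical units, e.g. mm^3/s
    volume_multiplier: float  # hypothetical scale on deposited volume


def clamp(x, lo, hi):
    return max(lo, min(hi, x))


def apply_correction(recipe, d_rate, d_volume):
    """Return a new recipe nudged by bounded relative changes; the base is untouched."""
    d_rate = clamp(d_rate, -MAX_ADJUST, MAX_ADJUST)
    d_volume = clamp(d_volume, -MAX_ADJUST, MAX_ADJUST)
    return replace(
        recipe,
        extrusion_rate=recipe.extrusion_rate * (1 + d_rate),
        volume_multiplier=recipe.volume_multiplier * (1 + d_volume),
    )


base = LayerRecipe(extrusion_rate=8.0, volume_multiplier=1.0)
# An agent asking for +50% rate is clipped to +10%; -5% volume passes through
corrected = apply_correction(base, d_rate=0.5, d_volume=-0.05)
print(corrected)
```

Clipping keeps the learned corrections within a small neighborhood of a recipe that is already known to work, which also keeps the action space small.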

AIPC derives its immense potential from this simple concept: by navigating the tempest of noisy, nonlinear data and extracting the essential features that act as harbingers of process variation, we can introduce deliberate deviations in manufacturing that correct the deviations already observed.

Our research teams are currently working on deployed instances of this framework in myriad contexts, ranging from semiconductor to chemical to transportation manufacturing. We are extending the results of SPC by leveraging the network of sensors already built into factories, providing an information-rich observation field from which inferential agents learn to better control these processes through real-time adaptation. We need not discard the fundamentals of SPC to improve manufacturing; instead, we build on them with AIPC to see the future of process control as it has never been seen before.

References:

Damas W. Limoge, et al. “Inferential Methods for Additive Manufacturing Feedback.” Proceedings of the American Control Conference 2020, Denver, Colorado, USA (27 Jul 2020). [DOI: 10.23919/ACC45564.2020.9147268]

Damas W. Limoge. “Imaging Systems for Applied Reinforcement Learning Control.” Embedded Vision Summit (Online), 2020. https://2020.embeddedvisionsummit.com/meetings/virtual/ZHsPquDDh7zGNhk7j

About the Author:

Damas Limoge is a Sr. R&D Engineer at Nanotronics, focusing on nonlinear system control and its integration with computer vision and deep reinforcement learning algorithms. He previously earned a master’s degree in mechanical engineering from the Massachusetts Institute of Technology, focusing on adaptive control methods. He worked on advanced battery management systems using control-oriented modeling techniques, developing a novel adaptive observer for parameter estimation of multi-input, multi-output systems. He has also worked with MIT Lincoln Laboratory, Resolute Marine Energy, General Electric, and Goodrich in various mechanical engineering and simulation design roles.