Expand the UNS by including governed data transformations and unified access to historical data for deeper insights and simplified processes.

by Jeff Knepper, Managing Director, Flow Software Inc.

The Unified Namespace (UNS) is a powerful enabler of modern industrial architectures. By providing real-time data to subscribing applications through a single, centralized hub, the UNS simplifies data flow and makes valuable insights immediately available. This architecture ensures that every system can easily access the same data set without cumbersome point-to-point integrations. It is an essential building block in the journey toward digital transformation.

The data provided by a UNS represents the last known value, a snapshot of real-time conditions. Many manufacturing operations above Level 2, however, are not driven by the most recent reading alone; they need to analyze data over longer durations: minutes, hours, shifts, or even months. Understanding patterns in machine behavior, material flows, and workforce efficiency requires more than real-time data; it requires comprehensive historical context.

When attempting to integrate historical data into a UNS-based architecture, you will likely evaluate the following options, each with its own challenges.

Point-To-Point Connectivity: Re-integrate applications with each of the historical data sources—historians, MES, ERP, maintenance systems, and others—resulting in the very complexity the UNS was meant to eliminate.

Enterprise Data Warehouse: Replicate all the historical data into a new system, such as a data lake or warehouse, which introduces duplication, a lack of data context, and long-term maintenance, cost, and overhead.

Database Registry: Build a federated database, a registry of all historical archives. This brings with it significant complexity in managing access, synchronization, and alignment between data sources.

Unfortunately, no matter which option you choose, an even larger issue is waiting to confront you: the transformation of raw data into actionable information. Turning raw data into valuable information requires a structured process. Its steps are universal and have been followed for decades.

Identify the Business Value: Define the specific decision or insight the information will drive and understand how the information will impact business operations and outcomes.

Locate and Ingest Raw Data: Identify the raw data required, often spread across multiple systems and technologies. Ingest and join the data from these sources.

Standardize and Normalize Data: Standardize data formats and time sampling, then normalize timestamps to enable row-based calculations.

Cleanse and Filter Data: Remove inaccuracies and inconsistencies, then filter data to ensure it accurately represents the sample or process being analyzed.

Identify Key Events: Determine the events relevant to the selected business use case, such as batch runs, shift/day/week periods, production milestones, safety incidents, or equipment failures.

Aggregate Data Around Events: Based on identified events, aggregate useful measures and indicators, then develop secondary aggregations to uncover trends, assess progress toward goals, and forecast future outcomes.

Validate Results: Validate the event periods and aggregated results to ensure accuracy and alignment with the intended business value, confirming that the insights are reliable and actionable.

Distribute Information: Distribute the information to stakeholders and systems.
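The middle steps above (standardize, cleanse, identify events, aggregate) can be illustrated with a minimal Python sketch. It is not the author's implementation: the `Sample` and `Event` types, the one-minute grid, the valid range of 0 to 500, and the shift boundaries are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean

@dataclass(frozen=True)
class Sample:
    ts: datetime
    value: float  # hypothetical measurement, e.g. line speed

@dataclass(frozen=True)
class Event:
    name: str
    start: datetime  # inclusive
    end: datetime    # exclusive

EPOCH = datetime(1970, 1, 1)

def normalize(samples, step):
    """Snap timestamps onto a fixed grid, keeping the last value per bucket."""
    buckets = {}
    for s in sorted(samples, key=lambda s: s.ts):
        offset = (s.ts - EPOCH) // step  # whole number of steps since EPOCH
        buckets[EPOCH + offset * step] = s.value
    return [Sample(ts, v) for ts, v in sorted(buckets.items())]

def cleanse(samples, low, high):
    """Drop readings outside the plausible range (assumed limits)."""
    return [s for s in samples if low <= s.value <= high]

def aggregate(samples, events):
    """Compute count and mean of the samples that fall inside each event."""
    results = {}
    for ev in events:
        values = [s.value for s in samples if ev.start <= s.ts < ev.end]
        results[ev.name] = {
            "count": len(values),
            "mean": mean(values) if values else None,
        }
    return results

raw = [
    Sample(datetime(2024, 1, 1, 6, 0, 10), 100.0),
    Sample(datetime(2024, 1, 1, 6, 0, 40), 102.0),  # same minute, supersedes 100.0
    Sample(datetime(2024, 1, 1, 6, 1, 5), -999.0),  # sensor fault code
    Sample(datetime(2024, 1, 1, 6, 2, 0), 98.0),
    Sample(datetime(2024, 1, 1, 14, 0, 30), 110.0),
]
shifts = [
    Event("shift_1", datetime(2024, 1, 1, 6), datetime(2024, 1, 1, 14)),
    Event("shift_2", datetime(2024, 1, 1, 14), datetime(2024, 1, 1, 22)),
]

clean = cleanse(normalize(raw, timedelta(minutes=1)), low=0.0, high=500.0)
summary = aggregate(clean, shifts)
print(summary)
```

In this sketch the rules live in one place and in a fixed order, which is the point of the governed framework discussed next: the same definitions of "valid reading" and "shift" apply to every consumer of the result.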

The entire process above should reside in a governed framework. Historically, however, it has been scattered across disparate tools (BI platforms, Excel sheets, reports, dashboards, and even personal notes on whiteboards), resulting in fragmented governance.

Governance is essential to ensure that the resulting information is not only accurate and reliable but also reusable and accessible across the organization. This is the business’s opportunity to create a trustworthy information management strategy and build an environment in which decisions are based on solid, consistent information.

Since the UNS is not intended to address the need for historical data access and data transformation, it alone cannot provide a complete solution. Achieving this requires supplementing the UNS with three critical components:

Platform-Agnostic Information Model: This serves as a shared framework, independent of any specific platform (SCADA, DCS, historian, etc.), for defining how raw data is cleansed, aggregated, and measured. It ensures operations and business stakeholders collaborate effectively, transforming data into actionable insights that accurately reflect real-world processes.

Intelligent Calculation and Integration Engines: These engines execute the rules defined in the information model, processing, calculating, and transforming data to ensure consistency and accuracy. Built for dynamic manufacturing environments, they handle late or changing data, automatically recalculate when necessary, and publish data upon change or in batch to the UNS or other applications and databases as instructed.

Federated Database and Information Hub: This hub provides a single connection to access both raw data from underlying databases and calculated data from the engines. It serves data on demand or publishes it in formats compatible with other systems, ensuring seamless access to any historical record required.

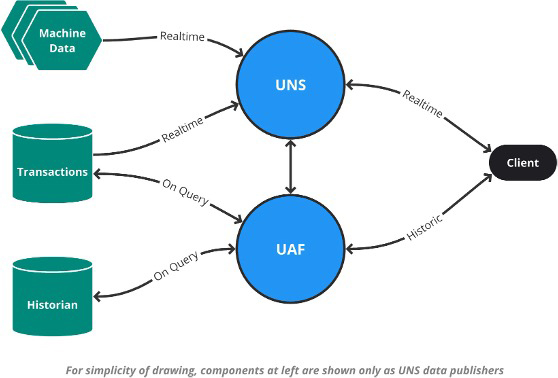

Together, these components are referred to as a Unified Analytics Framework, or UAF. By working in tandem, the UNS and UAF create a powerful, complementary architecture. The UNS provides real-time visibility and simplicity for current operations, while the UAF provides a central location to model, govern, and execute data transformation and becomes an archive of ready-to-use aggregated data and events.

By handling complex calculations and analytics externally, and publishing only relevant results to the UNS, the UAF maintains the simplicity of the real-time architecture while ensuring historical insights are readily accessible and easy to consume by all subscribers.
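As a rough illustration of calculating externally and publishing only relevant results, the sketch below recalculates a shift total whenever a reading arrives late or is corrected, and publishes only when the total actually changes. The `ShiftTotalEngine` class, the topic path, and the `publish` callback are hypothetical; a real deployment would hand the callback to an actual UNS client.

```python
class ShiftTotalEngine:
    """Keeps per-shift totals current and publishes them on change only."""

    def __init__(self, publish):
        self._readings = {}   # (shift, reading_id) -> quantity
        self._published = {}  # shift -> last total sent to subscribers
        self._publish = publish  # callable(topic, value), assumed UNS client hook

    def ingest(self, shift, reading_id, quantity):
        # A repeated reading_id overwrites the earlier value, so late
        # corrections replace the original instead of double counting.
        self._readings[(shift, reading_id)] = quantity
        total = sum(q for (s, _), q in self._readings.items() if s == shift)
        if self._published.get(shift) != total:
            self._published[shift] = total
            # Hypothetical topic layout, not a prescribed UNS structure.
            self._publish(f"site/line1/{shift}/total_units", total)

messages = []
engine = ShiftTotalEngine(lambda topic, value: messages.append((topic, value)))

engine.ingest("shift_1", "r1", 40)  # first reading: publishes 40
engine.ingest("shift_1", "r2", 60)  # second reading: publishes 100
engine.ingest("shift_1", "r2", 60)  # duplicate delivery: nothing published
engine.ingest("shift_1", "r1", 45)  # late correction: publishes 105

for topic, value in messages:
    print(topic, value)
```

Subscribers in this sketch see only three updates for four inputs, and never need to know that a correction occurred; they simply receive the current, recalculated value.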

Further, the UNS + UAF approach reduces the risks of forcing historical data through a real-time system. While the UNS could technically handle historical data or recalculated metrics, doing so introduces unnecessary complexity: subscribers may expect only current values, and managing exceptions to distinguish old data from live updates adds development burden. The UAF avoids these challenges by providing access to historical records that remain in their original location without requiring duplication or data migration into new systems.

This federated approach enables a single endpoint to query historical data across multiple systems and returns data in a single, consistent format, even when these queries span different databases and structures (e.g., time series archives, relational databases, etc.).
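A minimal sketch of this idea follows, assuming two stand-in sources: an in-memory list playing the role of a time series archive and an in-memory SQLite table playing the role of a relational system. The class names, table schema, tag names, and the record shape (`ts`, `tag`, `value`, `source`) are assumptions for illustration, and timestamps are kept as ISO 8601 strings so they compare correctly as text.

```python
import sqlite3

class TimeSeriesSource:
    """Stand-in for a historian; data stays in this object."""
    def __init__(self, points):
        self._points = points  # tag -> list of (iso_timestamp, value)

    def query(self, tag, start, end):
        return [
            {"ts": ts, "tag": tag, "value": v, "source": "historian"}
            for ts, v in self._points.get(tag, [])
            if start <= ts < end
        ]

class SqlSource:
    """Stand-in for a relational system with its own schema."""
    def __init__(self, conn):
        self._conn = conn

    def query(self, tag, start, end):
        rows = self._conn.execute(
            "SELECT recorded_at, qty FROM production "
            "WHERE item = ? AND recorded_at >= ? AND recorded_at < ?",
            (tag, start, end),
        ).fetchall()
        return [
            {"ts": ts, "tag": tag, "value": qty, "source": "mes_db"}
            for ts, qty in rows
        ]

class FederatedHub:
    """Single endpoint that routes each tag to the source that owns it."""
    def __init__(self, routes):
        self._routes = routes  # tag -> source object

    def query(self, tags, start, end):
        records = []
        for tag in tags:
            records.extend(self._routes[tag].query(tag, start, end))
        return sorted(records, key=lambda r: r["ts"])

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE production (item TEXT, recorded_at TEXT, qty REAL)")
conn.executemany(
    "INSERT INTO production VALUES (?, ?, ?)",
    [("good_units", "2024-01-01T06:30:00", 250.0),
     ("good_units", "2024-01-02T06:30:00", 300.0)],
)
historian = TimeSeriesSource({
    "line1/speed": [("2024-01-01T06:00:00", 100.0),
                    ("2024-01-01T07:00:00", 104.0)],
})

hub = FederatedHub({"line1/speed": historian, "good_units": SqlSource(conn)})
rows = hub.query(["line1/speed", "good_units"],
                 "2024-01-01T00:00:00", "2024-01-02T00:00:00")
for r in rows:
    print(r)
```

The caller issues one query and receives one record shape, while each source keeps its own storage and schema; nothing is copied into a new repository.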

The Unified Namespace is an important milestone in digital transformation. Alongside the UNS, organizations must simultaneously plan for accessing and utilizing historical data to uncover deeper insights. With the addition of a Unified Analytics Framework, businesses can extend the capabilities of the UNS, ensuring seamless access to real-time and historical data alike, all governed under a unified structure. This balance of agility and control is the key to unlocking the full potential of modern manufacturing systems.

About the Author

Jeff is a seasoned expert in data and analytics, in clearly communicating value, and in leading teams, with a career spanning over two decades across multiple industries including automotive, oil/gas, manufacturing, and utilities. As Managing Partner at Flow Software, Inc. and Co-Founder of IntegrateLive!, he applies his deep understanding of data-driven solutions to improve process metrics and efficiency. Known for his collaborative approach, Jeff is passionate about empowering others, constantly learning, and leveraging his expertise to help individuals and organizations achieve their goals.
